In a distributed architecture, how do microservices communicate with each other? In the traditional browser-client model, JSON or XML is the answer. But is that really optimal if you control both the client and the server? The overhead of encoding data as strings, sending them over the network, then decoding and parsing them adds up at scale. The solution: gRPC and protobuf.

gRPC is a high-performance, open-source framework for Remote Procedure Calls (RPC). It allows you to call a procedure (think function / method) in a different address space, e.g. on a remote computer on a shared network (think another microservice), as if it were a local procedure call. The programmer (think you or me) need not worry about the explicit details of the remote interaction.

The network communication happens as binary data over HTTP/2, which is typically more compact on the wire and cheaper to parse than text formats like JSON and XML.

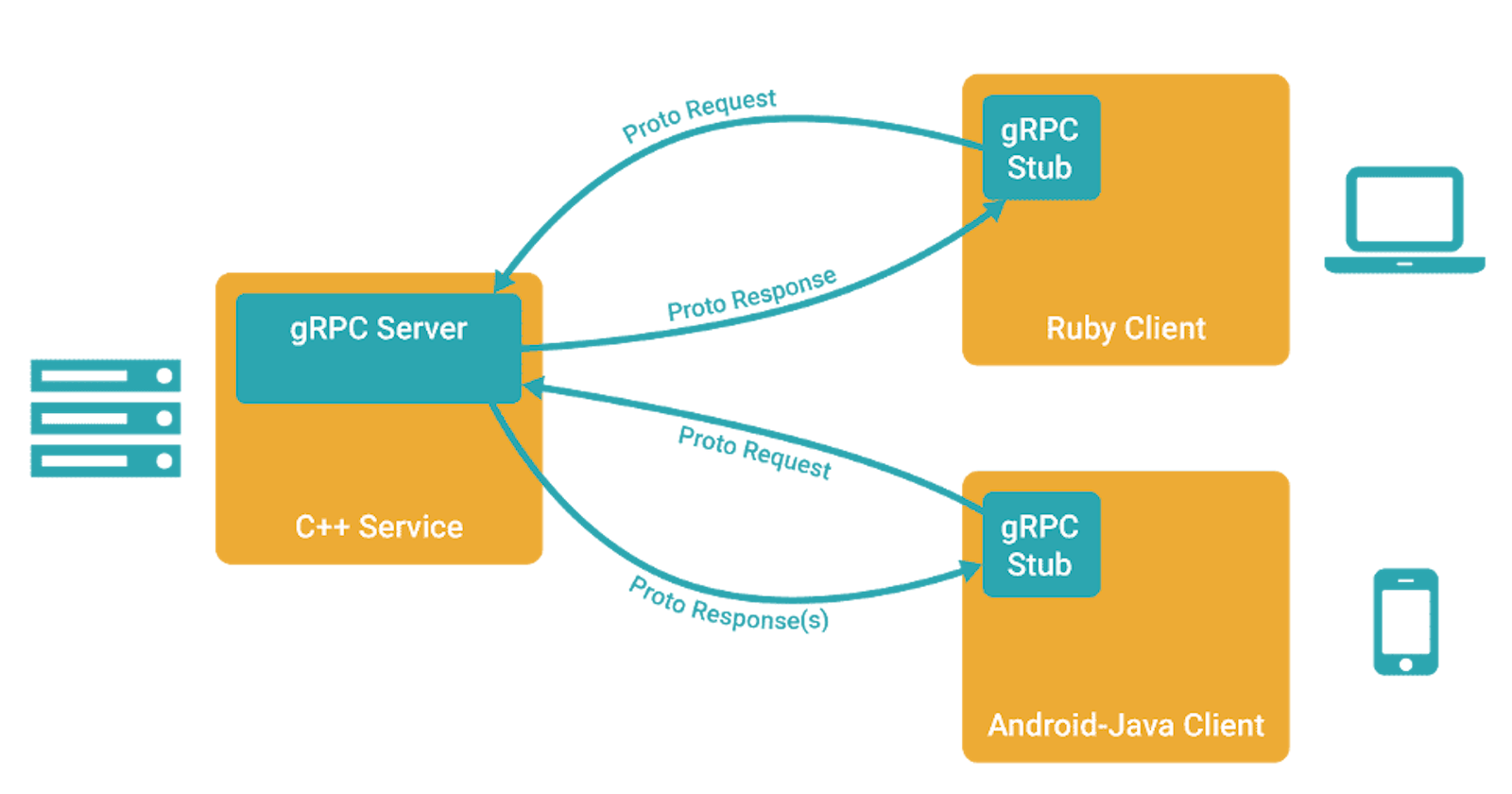

The caller of the procedure is known as the client and the executor of the procedure is known as the server. A procedure can be called from any gRPC-supported language and executed in any gRPC-supported language; the two need not be the same. gRPC takes care of the plumbing behind the scenes for you.
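To make the client/server split concrete, here is a minimal sketch that uses Go's standard-library `net/rpc` package as a stand-in. It is not gRPC (it uses gob encoding over a plain connection rather than protobuf over HTTP/2), but the shape of the idea is the same: the client makes what looks like an ordinary call and the server executes it. The `Cats` type, the `Greet` method and the `callRemote` helper are made up for this illustration, and an in-memory `net.Pipe` plays the role of the network.

```go
package main

import (
	"fmt"
	"net"
	"net/rpc"
)

// Cats is a hypothetical service; its exported methods become remote procedures.
type Cats struct{}

// Greet follows the method shape net/rpc expects: (args, *reply) error.
func (c *Cats) Greet(name string, reply *string) error {
	*reply = "meow, " + name
	return nil
}

// callRemote starts a server on one end of an in-memory pipe and calls
// Greet from the other end, the way a client on another machine would.
func callRemote(name string) (string, error) {
	serverConn, clientConn := net.Pipe()

	// Server side: register the service and serve this one connection.
	srv := rpc.NewServer()
	if err := srv.Register(new(Cats)); err != nil {
		return "", err
	}
	go srv.ServeConn(serverConn)

	// Client side: the remote procedure is addressed as "Type.Method".
	client := rpc.NewClient(clientConn)
	defer client.Close()

	var reply string
	err := client.Call("Cats.Greet", name, &reply)
	return reply, err
}

func main() {
	reply, err := callRemote("Tom")
	if err != nil {
		fmt.Println("call failed:", err)
		return
	}
	fmt.Println(reply)
}
```

The caller never touches sockets or encoding directly; that is the part an RPC framework hides. gRPC adds a language-neutral contract on top, so the two sides do not both have to be written in Go.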

protobuf (short for Protocol Buffers) is what gRPC uses to define your data objects, convert them to and from binary, and generate matching datatypes in your language of choice. These data objects are defined in a .proto file.

If you want to sound smart at the coffee table, say protobuf is an Interface Definition Language (IDL) and a binary serialization/deserialization library.

To set up a gRPC client and server, the two have to agree on the shared procedures and on the datatypes those procedures receive and return. This agreement is written down in a protobuf definition file.

.proto is the extension of a protobuf definition file, which defines the rpc services (think remote procedures to be called) and the datatypes passed to and returned from them. Here is what a simple .proto looks like:

    syntax = "proto3";

    package cats;

    // Remote procedures that the server implements and clients call.
    service CatsService {
      rpc GetCat(GetCatRequest) returns (Cat) {}
      rpc AddCat(AddCatRequest) returns (Cat) {}
    }

    message GetCatRequest {
      string id = 1;
    }

    message Cat {
      string id = 1;
      string name = 2;
      string sex = 3;
      uint32 age = 4;
    }

    message AddCatRequest {
      Cat cat = 1;
    }

Our gRPC clients will call the GetCat and AddCat methods (in their language of choice). The server will hold the implementation of these methods (in its language of choice). But all the clients and the server must pass and return datatypes as laid out in the .proto file.

Here is a short breakdown of the above .proto file:

- syntax: the version of the proto language this file is written in (proto3 here)

- package: a namespace for the definitions in the file; a best practice to prevent name clashes

- service: rpc functions need to be wrapped in a service. The protobuf compiler will generate an interface and stubs from this service.

- rpc method: specifies the method name and its request and response messages. gRPC also supports streaming methods, which is even cooler (let's not worry about that in a 101)

- message: datatype passed to/returned from the RPC function. Every rpc method must declare a request message and a response message.

- message field: field type, field name and field number. The field number is required, must be unique within its message, and is what identifies the field in binary serialization and deserialization
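To see why the field number matters, here is a minimal sketch that hand-encodes the Cat message following the protobuf wire format: each field is written as a key of `(field number << 3) | wire type`, followed by the value. In real code the generated types and the protobuf library do this for you; the helper names (`appendTag`, `appendString`, `appendUint32`, `encodeCat`) and the sample cat are made up for illustration, and the JSON struct tags are an assumption about what an equivalent JSON payload might look like.

```go
package main

import (
	"encoding/binary"
	"encoding/json"
	"fmt"
)

// Cat mirrors the Cat message from the .proto file.
type Cat struct {
	ID   string `json:"id"`
	Name string `json:"name"`
	Sex  string `json:"sex"`
	Age  uint32 `json:"age"`
}

// Wire types defined by the protobuf encoding.
const (
	wireVarint = 0 // variable-length integers, used for uint32 among others
	wireBytes  = 2 // length-delimited: strings, bytes, nested messages
)

// appendTag writes the field key: (field number << 3) | wire type.
func appendTag(b []byte, fieldNum, wireType uint64) []byte {
	return binary.AppendUvarint(b, fieldNum<<3|wireType)
}

// appendString writes a string field as key, length, raw bytes.
// proto3 leaves out fields that hold their default (empty) value.
func appendString(b []byte, fieldNum uint64, s string) []byte {
	if s == "" {
		return b
	}
	b = appendTag(b, fieldNum, wireBytes)
	b = binary.AppendUvarint(b, uint64(len(s)))
	return append(b, s...)
}

// appendUint32 writes a uint32 field as key, varint value.
func appendUint32(b []byte, fieldNum uint64, v uint32) []byte {
	if v == 0 {
		return b
	}
	b = appendTag(b, fieldNum, wireVarint)
	return binary.AppendUvarint(b, uint64(v))
}

// encodeCat uses the field numbers 1 to 4 from the .proto file.
// Note that the field names never appear in the output.
func encodeCat(c Cat) []byte {
	var b []byte
	b = appendString(b, 1, c.ID)
	b = appendString(b, 2, c.Name)
	b = appendString(b, 3, c.Sex)
	b = appendUint32(b, 4, c.Age)
	return b
}

func main() {
	c := Cat{ID: "42", Name: "Tom", Sex: "male", Age: 3}
	pb := encodeCat(c)
	js, _ := json.Marshal(c)
	fmt.Printf("protobuf: %d bytes: % x\n", len(pb), pb)
	fmt.Printf("json:     %d bytes: %s\n", len(js), js)
}
```

For this sample cat the hand-encoded payload comes to 17 bytes against 45 bytes of JSON, mostly because each field name is replaced by a one-byte key. This compares payloads only and ignores HTTP/2 framing. It also shows why you should not change the number of a field once it is in use: the number is the only thing that tells the receiver which field it is reading.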

The .proto file is converted to your language of choice with protoc, the protobuf compiler. Language-specific plugins help protoc with this task. In my language of choice, Go, protoc generates .pb.go source files. The CatsService is converted into an interface with GetCat and AddCat methods, which the server must implement. The messages are converted into structs.
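As a rough picture of what that looks like in Go, here is a hand-written approximation of the generated types together with a toy server implementation. The names `CatsServiceServer` and `Id` follow the conventions of the Go plugins, but the real generated code contains more (internal fields, getters, registration helpers), so treat this as a sketch. `inMemoryCats`, `newInMemoryCats` and `demo` are hypothetical, and no gRPC transport is wired up here.

```go
package main

import (
	"context"
	"errors"
	"fmt"
	"sync"
)

// Simplified stand-ins for the structs generated from the messages.
type Cat struct {
	Id   string
	Name string
	Sex  string
	Age  uint32
}

type GetCatRequest struct{ Id string }

type AddCatRequest struct{ Cat *Cat }

// CatsServiceServer approximates the interface generated from the service.
type CatsServiceServer interface {
	GetCat(context.Context, *GetCatRequest) (*Cat, error)
	AddCat(context.Context, *AddCatRequest) (*Cat, error)
}

var errNotFound = errors.New("cat not found")

// inMemoryCats is a hypothetical implementation backed by a map.
type inMemoryCats struct {
	mu   sync.Mutex
	cats map[string]*Cat
}

func newInMemoryCats() *inMemoryCats {
	return &inMemoryCats{cats: make(map[string]*Cat)}
}

func (s *inMemoryCats) GetCat(ctx context.Context, req *GetCatRequest) (*Cat, error) {
	s.mu.Lock()
	defer s.mu.Unlock()
	cat, ok := s.cats[req.Id]
	if !ok {
		return nil, fmt.Errorf("cat %q: %w", req.Id, errNotFound)
	}
	return cat, nil
}

func (s *inMemoryCats) AddCat(ctx context.Context, req *AddCatRequest) (*Cat, error) {
	if req.Cat == nil || req.Cat.Id == "" {
		return nil, errors.New("a cat with a non-empty id is required")
	}
	s.mu.Lock()
	defer s.mu.Unlock()
	s.cats[req.Cat.Id] = req.Cat
	return req.Cat, nil
}

// Compile-time check that inMemoryCats satisfies the interface.
var _ CatsServiceServer = (*inMemoryCats)(nil)

// demo adds a cat and reads it back through the interface.
func demo() (string, error) {
	var svc CatsServiceServer = newInMemoryCats()
	ctx := context.Background()
	req := &AddCatRequest{Cat: &Cat{Id: "1", Name: "Tom", Sex: "male", Age: 3}}
	if _, err := svc.AddCat(ctx, req); err != nil {
		return "", err
	}
	cat, err := svc.GetCat(ctx, &GetCatRequest{Id: "1"})
	if err != nil {
		return "", err
	}
	return cat.Name, nil
}

func main() {
	name, err := demo()
	fmt.Println(name, err)
}
```

In a real service you would register an implementation like this with a gRPC server, and clients would reach it through the generated client stub instead of calling the methods directly.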

The server code can then implement the methods, and the client code can call them, each running in its own address space.

Et voila!

There is much more to microservices, but you now have a clearer understanding of how various microservices work together in a distributed architecture by efficiently communicating via gRPC.